Every time I’ve spoken with someone about programming in the last month or so, machine learning or artificial intelligence has come up. Let me tell you the reasons why I don’t care a whole lot about it.

1. It’s not going to put me out of a job

At least 75% of the actual job of programming is figuring out what a person means when they ask for something. This is not a problem that a machine can solve for you, for the same reason that it’s harder to build a robot that builds other robots than to just build a robot. Have you had words with Siri lately? Do you think Siri is in a good position to understand your intent, or is it maybe just searching for command words in a limited lexicon? Do you really think it could find Henry’s stool? Or does it seem more likely that it would get confused and require a human… trained to translate… high-level human-language… into language suitable for… the machine?

2. Machine learning is not creative

Machine learning is great at solving problems where you start with a thousand test cases and have no idea how to write the function to distinguish them. A great deal of the work of “software 2.0” will be a sort of data docent work involving curating data sets of different character. This isn’t going to be an effective or efficient way of solving general-purpose problems any more than it was when Lisp and Prolog claimed they could do it in the 1980s.

By the way, Lisp and Prolog are still fun and cool, and playing with them is a great way to wash the bullshit out of your eyes about AI.

3. More random failure is not a feature

When I ask Siri to set a timer for my tea, it works about 90% of the time. For reference, this means that about once a week, it fails to understand me, and I always say exactly the same thing. This failure rate is completely acceptable for toys like Siri. It’s really not acceptable for most applications we like to use computers for. Is a transfer of money involved? It seems unlikely to me that anyone who works for a living will accept a 10% chance that the money either doesn’t get sent or doesn’t arrive. It seems more likely to me you want the most straightforward code path you can imagine. A big detour through an opaque neural network for grins feels like a bad idea.

The majority of software is actually in this situation. You don’t want a 10% chance your web service does not get called. You don’t want a 10% chance that your log message does not get written or reported. You don’t want a 10% chance that the email to the user does not get sent.
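To put rough numbers on that, here is a minimal sketch in Python. It assumes every step fails independently with the same 10% chance, which is my simplification and not a measured figure, and the function names `chance_all_succeed` and `expected_failures` are made up for illustration.

```python
def chance_all_succeed(per_step_success: float, steps: int) -> float:
    """Probability that every one of `steps` independent steps succeeds.

    Assumes each step succeeds with the same probability and that
    failures are independent (a simplifying assumption).
    """
    if not 0.0 <= per_step_success <= 1.0:
        raise ValueError("per_step_success must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must not be negative")
    return per_step_success ** steps


def expected_failures(per_step_success: float, attempts: int) -> float:
    """Average number of failures over `attempts` independent tries."""
    if not 0.0 <= per_step_success <= 1.0:
        raise ValueError("per_step_success must be between 0 and 1")
    if attempts < 0:
        raise ValueError("attempts must not be negative")
    return (1.0 - per_step_success) * attempts


if __name__ == "__main__":
    # Hypothetical request: call the web service, write the log, send the email.
    for steps in (1, 3, 5):
        print(steps, "steps:", round(chance_all_succeed(0.9, steps), 3))
    # Hypothetical usage: ten tea timers a week at a 90% success rate.
    print("failures per week:", round(expected_failures(0.9, 10), 2))
```

Under those assumptions, a request that needs the web service call, the log message and the email to all go through only fully succeeds about 73% of the time. The same arithmetic gives about one failed tea timer a week if you set ten of them.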

When are you content with a high probability of nothing happening? When you’re making something that is essentially a toy, or perhaps, doing a task where no one can really tell if you’re doing anything at all, which brings us to…

4. ML is painstakingly squeezing the last drop of profit out of a failing business model

The nice thing about advertising is that someone can pay you $500 to do it, and then if nothing happens, you can just shrug and say “try again.” Google wants you to believe that you’re accomplishing something, but how many people out there actually know that the money they spend on Google is giving them a positive return?

Let’s play a game: if people who believe they need your product stop buying your product, and you’re left only with the customers who actually do need your product, how does your future look? I think if your primary product is advertising, you’re probably a lot more fucked than someone else who does something more tangible. To be blunt, if all fads were cancelled tomorrow, Facebook and Google would be done for, but Wolfram would get maybe a little blip. You want to work for the blip companies.

5. ML squeezes the last drop from the lemon

ML is a great tool for handling that last fuzzy piece of an interesting application. As an ingredient, it’s more like truffles than flour, potatoes or onions. It’s an expensive, complex tool that solves very specific problems in very specific circumstances.

Unfortunately, there are a few large companies that stand to make significantly more money if either A) machine learning makes significant advances in the failing advertising market, or (more likely) B) machine learning specialists glut the market and suddenly earn more like what normal programmers earn.

Does that change your understanding of why Google and Facebook might be trying to make sure this technology is as widely available and understood as possible? Bear in mind that unless you have massive data sets, machine learning is basically useless. A startup with a proprietary machine learning algorithm that beat the socks off anything Facebook or Google has would be greatly hampered by lack of data—and Facebook and Google are definitely able to compensate for any algorithmic shortcoming either by throwing a ton of compute at the problem or by throwing a ton of computational “manual labor” hacks at the problem.

6. Something else is going to end the profession of programming first

Of the 25% of my job that actually requires programming, probably about 90% of it is in the service of things users simply can’t detect. Code quality. Testing. Following the latest UI fads. Worrying about the maintenance programmer. Design.

Here’s a frightening question for any programmer. How much of your job disappears if users don’t mind if the app is ugly and are able to do basic programming on their own? I’m not being facetious. If we were really honest, I think we would admit that A) most of what we do is done for reasons that ultimately amount to fashion, and B) a more computationally-literate, less faddish user base requires dramatically less effort to support.

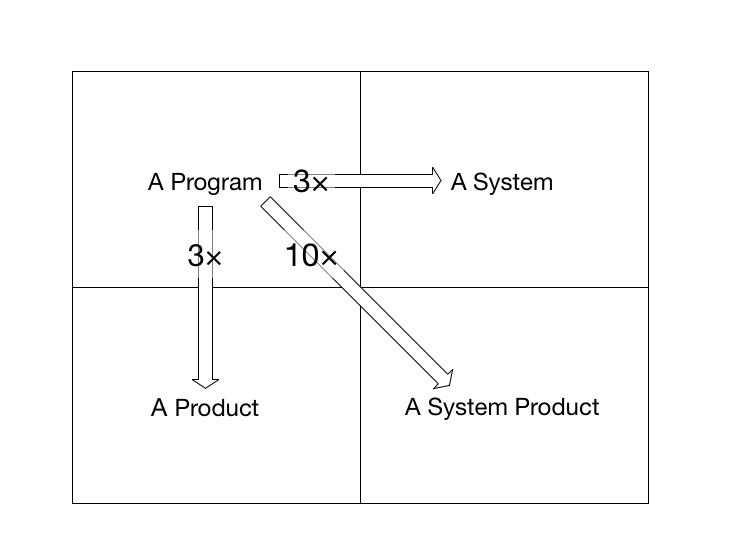

Let’s recall Fred Brook’s program-system-product grid:

What you’re seeing there is the admission of our industry that solving a problem costs 1/10th of what you’re paying, because you want a nice UI.

All it would take to decimate the population of programmers would be for people to get computer literate and not be so picky about the colors. This sounds to me like something that happens when crafts get industrialized: the faddish handcraftedness is replaced by easier-to-scale, lower-quality work.

I think the emphasis on machine learning is looking at Fabergé eggs and saying “all handcrafts are going to be like this” simply because it’s very lucrative for Fabergé.

Nope.

Your software is not going to be that cool. It’s going to come in beige and all the ports are going to be standardized. But, it will actually do real work that real people need done, and that is way better than making useless decorative eggs for the new Tsars.

Conclusion

Machine learning is boring.